NVIDIA Expands Its Reach as Meta and Oracle Adopt Spectrum-X Ethernet to Power Next-Generation AI Systems

Silicon Valley’s New Battleground: Inside NVIDIA’s Bold Play to Rewire AI’s Nervous System

Meta and Oracle embrace Spectrum-X Ethernet in a move that could reshape the architecture of artificial intelligence—and test the limits of one company’s influence

SAN JOSE, Calif. — The data centers driving today’s artificial intelligence boom are hitting a wall. Their neural networks keep getting bigger, but the digital “pipes” connecting them just can’t keep up.

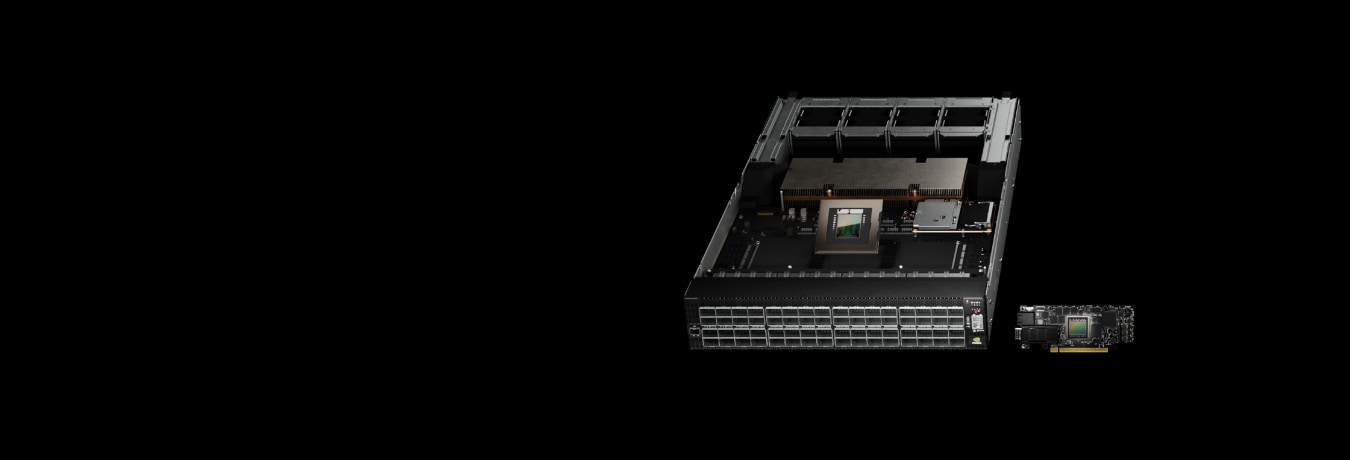

On Monday, NVIDIA revealed that both Meta and Oracle plan to roll out its Spectrum-X Ethernet switches across their massive AI infrastructure. The move pushes NVIDIA’s dominance beyond chips and straight into the network fabric that lets the GPUs in a machine learning system exchange data with one another.

It’s a big deal for both companies, though for different reasons. Meta, famous for its open-source, multi-vendor approach, and Oracle, known for its tight, all-in-one integration, rarely walk the same path. Their shared vote of confidence in NVIDIA marks a possible turning point in how tech giants build what Jensen Huang, NVIDIA’s CEO, likes to call “AI factories.”

But beneath the excitement lies an industry-wide question: will the future of AI run on tightly controlled, vertically integrated systems owned by single vendors—or on open, flexible standards that keep the market competitive and buyers in control?

When Networks Become Bottlenecks

The issue boils down to physics. As today’s language models balloon to trillions of parameters, they rely on thousands of GPUs constantly exchanging data—gradient updates, model weights, and everything in between. That nonstop back-and-forth floods the network with enormous bursts of traffic.

Traditional Ethernet gear simply wasn’t built for that kind of punishment. Analysts estimate that typical data-center networks hit only about 60% effective throughput during AI training. The rest gets swallowed by congestion, buffer delays, and traffic collisions. Every lost percentage point means idle GPUs—millions of dollars in hardware sitting around, twiddling their digital thumbs.

NVIDIA claims its Spectrum-X system changes that math. By pairing purpose-built switches with custom network cards and AI-tuned software, the company says it can push utilization to 95%. The platform predicts where congestion will form and reroutes data before it becomes a problem. Those numbers, NVIDIA says, come straight from its own supercomputers—though outside validation in real-world, multi-vendor setups is still limited.
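To see why the gap between 60% and 95% matters, here is a minimal sketch of a single training step. It assumes a deliberately simple model that is not from NVIDIA or the analysts: communication is not overlapped with compute, and communication time scales inversely with effective throughput. The workload numbers (1.0 s of compute, 0.5 s of data exchange at full line rate) are hypothetical.

```python
def step_time(compute_s: float, comm_at_line_rate_s: float, efficiency: float) -> float:
    """Seconds per training step when communication is not overlapped with compute.

    efficiency is the fraction of line rate the network actually delivers.
    """
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return compute_s + comm_at_line_rate_s / efficiency


def gpu_busy_fraction(compute_s: float, comm_at_line_rate_s: float, efficiency: float) -> float:
    """Share of each step the GPUs spend computing rather than waiting on the network."""
    return compute_s / step_time(compute_s, comm_at_line_rate_s, efficiency)


# Hypothetical workload, chosen only for illustration.
COMPUTE_S = 1.0   # pure GPU compute per step
COMM_S = 0.5      # time to exchange gradients at 100% of line rate

if __name__ == "__main__":
    for eff in (0.60, 0.95):  # the two throughput figures quoted in the article
        t = step_time(COMPUTE_S, COMM_S, eff)
        busy = gpu_busy_fraction(COMPUTE_S, COMM_S, eff)
        print(f"efficiency={eff:.0%}  step={t:.3f}s  GPUs busy={busy:.1%}")
```

Real training jobs overlap communication with compute, so the actual benefit depends heavily on the workload. The sketch only shows the direction of the effect.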

The Hyperscaler Equation

Of the two adopters, Meta’s move turns more heads. The company practically wrote the book on open networking, avoiding vendor lock-in by mixing and matching gear from Broadcom, Arista, and others. So seeing Meta fold NVIDIA technology into its Minipack3N switches and Facebook Open Switching System software suggests something significant: either NVIDIA’s hardware really is that far ahead, or Meta has decided that AI performance and network design can no longer be treated separately.

Insiders whisper that Meta isn’t throwing the baby out with the bathwater. Instead, it’ll likely run Spectrum-X alongside its existing Broadcom infrastructure—hedging its bets while keeping options open.

Oracle’s play, by contrast, fits its usual pattern. The company has built its cloud strategy around deep collaboration with NVIDIA. Its adoption of Spectrum-X aligns with the next-gen “Vera Rubin” architecture—designed to link millions of GPUs into what the partners call “giga-scale AI factories.” For Oracle, the logic is simple: when speed and reliability matter most, integration beats modularity every time.

The Merchant Silicon Counterpunch

NVIDIA’s momentum hasn’t gone unnoticed. Broadcom, whose chips form the backbone of much of the internet, recently landed a major AI networking deal with OpenAI, built around its own Ethernet-optimized designs. Cisco is pushing its Silicon One P200 chips for the long-haul connections that link data centers across continents, with Microsoft and Alibaba already on board.

Meanwhile, the Ultra Ethernet Consortium—a heavyweight alliance including AMD, Arista, Broadcom, Cisco, Intel, Meta, and Microsoft—has launched its 1.0 spec. Its goal? Match NVIDIA’s performance without locking customers into one vendor. In other words, it’s a coordinated strike against proprietary stacks.

The stakes couldn’t be higher. Analysts expect AI data-center networking to pull in between $80 and $100 billion in switch revenue over the next five years. As Ethernet speeds jump from 800 Gbps to 1.6 Tbps, NVIDIA’s challenge is clear: hold its lead while the rest of the industry races to catch up.

Parsing Performance Claims

That 95-versus-60-percent throughput claim sounds impressive, but context matters. NVIDIA’s numbers come from its own test labs, under ideal conditions and workloads tuned for its gear. Out in the wild—where networks are messy, mixed, and unpredictable—the results can look very different.

Competitors aren’t buying the full story either. Engineers familiar with Broadcom’s Jericho4 deployments say their systems can reach throughput efficiency in the high-80s percent range when tuned properly. The remaining gap, they argue, has less to do with hardware and more to do with how networks are architected and optimized.

Still, there’s no denying NVIDIA’s edge in integration. Its network cards, switches, software libraries, and congestion controls all operate as one cohesive organism. That’s hard to replicate in the open ecosystem, where multiple vendors have to play nice. Whether customers will accept less flexibility for that performance boost depends on how much they value freedom over speed.

The Scale-Across Gambit

The next frontier isn’t just bigger models—it’s spreading them out. NVIDIA’s new Spectrum-XGS technology aims to connect entire data centers into unified, global training clusters. As models grow too large for a single site, and as power-hungry GPU farms chase affordable energy across regions, the ability to coordinate training across continents becomes crucial.

Here, NVIDIA faces Cisco head-on. Cisco’s deep-buffer silicon is tailor-made for long-haul AI traffic. The battle won’t be decided by sheer specs, but by how well each platform handles the latency and failures that come with distributed training jobs. When you’re syncing GPUs across thousands of miles, even a hiccup can derail an entire run.
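The distance problem can be made concrete with a rough propagation-delay estimate. The sketch below assumes light travels through optical fiber at about 200 km per millisecond (roughly two-thirds of its speed in a vacuum) and ignores switching, queuing, and retransmission delays. The `route_factor` parameter is a hypothetical knob for fiber paths that run longer than the straight-line distance.

```python
FIBER_KM_PER_MS = 200.0   # approximate speed of light in glass fiber
KM_PER_MILE = 1.609344


def one_way_delay_ms(distance_miles: float, route_factor: float = 1.0) -> float:
    """Propagation delay in milliseconds over a fiber path of the given length."""
    if distance_miles < 0 or route_factor < 1.0:
        raise ValueError("distance must be >= 0 and route_factor >= 1.0")
    return distance_miles * KM_PER_MILE * route_factor / FIBER_KM_PER_MS


def round_trip_ms(distance_miles: float, route_factor: float = 1.0) -> float:
    """Round-trip propagation delay: the floor on any request/acknowledge exchange."""
    return 2.0 * one_way_delay_ms(distance_miles, route_factor)


if __name__ == "__main__":
    for miles in (100, 1000, 3000):
        print(f"{miles:>5} miles: round trip >= {round_trip_ms(miles):.1f} ms")
```

Inside a single data center the same exchange takes microseconds. That gap of several orders of magnitude is why buffering and failure handling, not raw port speed, are likely to dominate cross-site training.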

Investment Perspectives and Forward Signals

For investors and analysts, the business angle is clear. Networking could become NVIDIA’s next big profit engine. Beyond the switches themselves, there’s money in network cards, optics, and the software glue holding it all together. If NVIDIA grabs even 10 to 20 percent of the AI Ethernet market, analysts say it could bring in low double-digit billions of dollars in yearly revenue by 2027, with margins in the mid-30s percent range.
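A quick sanity check on that projection is to back out the market size it implies. The sketch below assumes "low double-digit billions" means somewhere between $10 billion and $15 billion a year. That reading is an assumption made here for illustration, not a figure from the analysts.

```python
def implied_market_range(
    revenue_low_b: float,
    revenue_high_b: float,
    share_low: float,
    share_high: float,
) -> tuple[float, float]:
    """Return (min, max) annual market size in $B implied by a revenue and share range.

    The smallest market pairs the lowest revenue with the highest share;
    the largest pairs the highest revenue with the lowest share.
    """
    if not 0.0 < share_low <= share_high <= 1.0:
        raise ValueError("shares must satisfy 0 < share_low <= share_high <= 1")
    if not 0.0 <= revenue_low_b <= revenue_high_b:
        raise ValueError("revenues must satisfy 0 <= low <= high")
    return revenue_low_b / share_high, revenue_high_b / share_low


if __name__ == "__main__":
    # Assumed revenue range ($B per year) and the 10-20% share quoted in the article.
    low, high = implied_market_range(10.0, 15.0, 0.10, 0.20)
    print(f"Implied annual market: ${low:.0f}B to ${high:.0f}B")
```

Under these assumptions the projection implies an annual market several times larger than switch sales alone would suggest. That is consistent with the estimate counting network cards, optics, and software as well as switches.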

But competition is heating up fast. Broadcom’s OpenAI partnership proves merchant silicon still matters. The Ultra Ethernet Consortium’s push for open standards could squeeze NVIDIA’s pricing power within the next two to three years if performance parity is achieved. Historically, hyperscalers like Meta prefer to buy from multiple sources, which suggests they won’t hand NVIDIA the keys without a backup plan.

For equipment makers, the ripple effects vary. Arista Networks faces the sharpest threat since NVIDIA’s hardware now overlaps with its turf. Cisco might find its niche in long-haul connectivity, but it’ll need to move quickly. Broadcom remains resilient thanks to its diverse customer base, even if it loses some share in specific AI accounts.

Key data points to watch: how Meta’s and Oracle’s real-world deployments perform; how demand shifts for 1.6-terabit optics; and whether regulators start questioning NVIDIA’s growing ecosystem control.

And as always, investors should remember—past performance isn’t destiny. The semiconductor networking scene moves fast, shaped by tech leaps, competitive surprises, and shifting standards. Staying informed and diversified isn’t just smart—it’s essential.

The Open Question

In the end, the core dilemma remains. Will AI infrastructure consolidate around tightly integrated platforms chasing every last drop of performance? Or will it stay open, distributed, and competitive?

Monday’s announcement nudges the pendulum toward integration, but it also lights a fire under the open-standard camp. Both sides know the race isn’t just about raw speed—it’s about who controls the architecture of the AI era itself.

What Meta and Oracle build from here—and whether they keep the door open to alternatives—will tell us which vision wins in this new age of giga-scale intelligence.

NOT INVESTMENT ADVICE