The Code Whisperers: How AI Assistants Are Reshaping Software Development — and Why They're Not Ready for Prime Time

In the gleaming offices of Silicon Valley and coding bootcamps around the world, a quiet revolution is underway. Developers are typing less and thinking more, as artificial intelligence tools promise to handle the grunt work of programming. But six months into 2025, the honeymoon phase with AI coding assistants is revealing some uncomfortable truths about the gap between promise and performance.

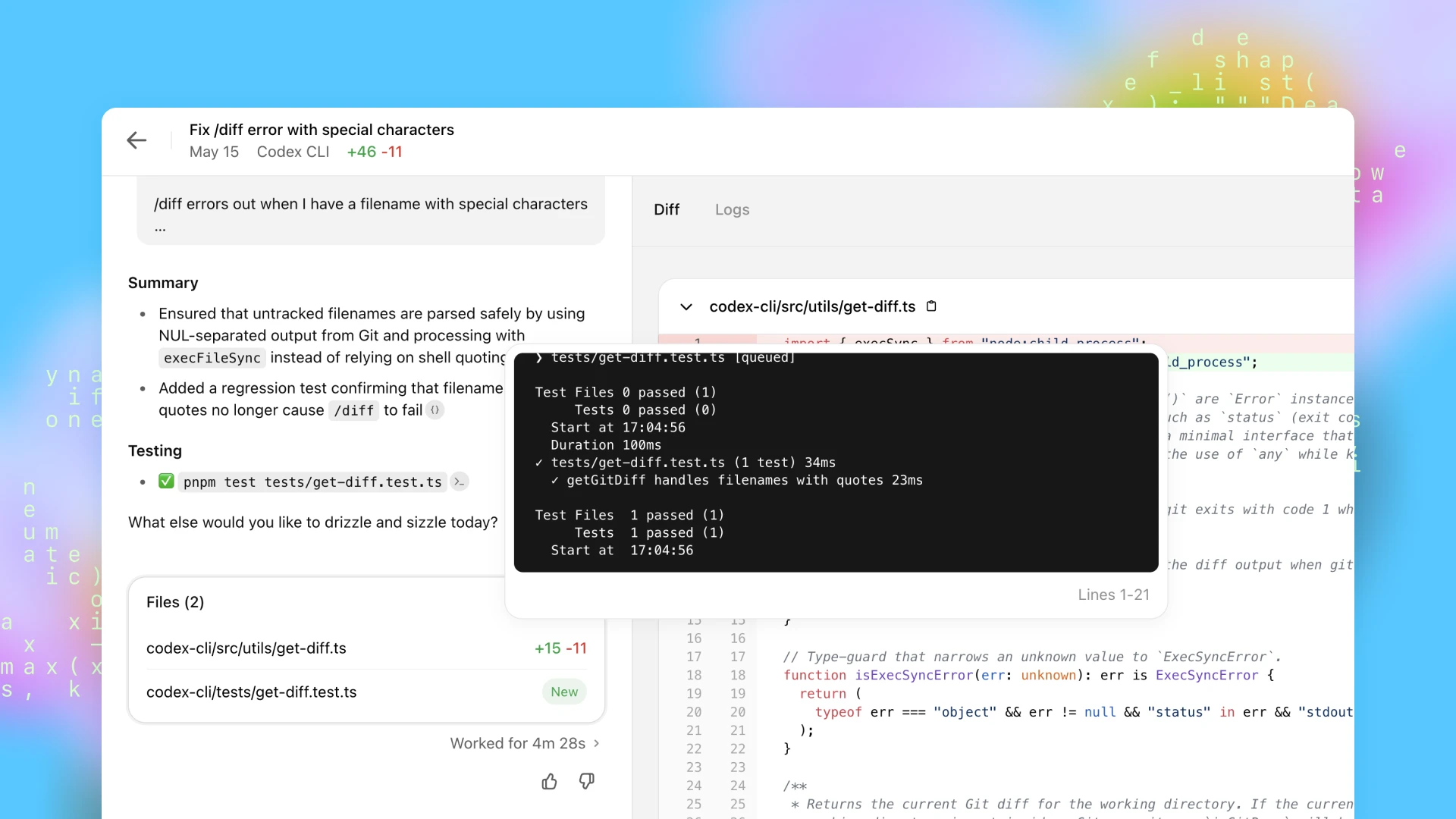

The latest batch of AI-powered development tools (OpenAI's Codex, Claude Code, SWE-agent, and Cursor) has captured the imagination of programmers worldwide. Yet user feedback paints a complex picture: these tools can boost productivity dramatically for certain tasks while failing spectacularly at others, often within the same coding session.

Comparison of Agentic Programming Tools

| Aspect | OpenAI Codex | Claude Code | SWE-agent | Cursor |
|---|---|---|---|---|
| Introduction Date | May 16, 2025 | February 2025 (beta) | February 2025 (v1.0) | Not specified (existing tool with updates) |
| Base Model | Codex-1 (fine-tuned version of OpenAI-o3) | Claude 3.7 Sonnet | Any LM of choice (e.g., GPT-4o, Claude Sonnet 4) | Mix of purpose-built and frontier models |
| Integration | Cloud-based, ChatGPT sidebar (Pro, Team, Enterprise; soon Plus, Edu) | Terminal-based | GitHub issues, local or cloud | VS Code embedded |
| Capabilities | Automates coding tasks (refactoring, tests, etc.) | Routine tasks, git workflows, refactoring, etc. | Fixes GitHub issues, coding challenges, cybersecurity | Code generation, smart rewrites, agent mode |
| User Interaction | Chat-based via ChatGPT | Natural language in terminal | Command-line interface, configurable | Natural language or code in VS Code |
| Context Understanding | Preloaded cloud repositories | Local access via terminal | Access to GitHub repositories | Local access to entire codebase |
| Security | Isolated cloud sandboxes, internet-free | Local terminal operation | Sandboxed code execution, local/cloud | Local operation, Privacy Mode |
| Pricing | Part of ChatGPT subscriptions (Plus, Pro, Team, Enterprise) | Beta, likely free/restricted | Open-source (MIT), free | Subscription plans starting at $20/month |
| Strengths | Multi-language support (12+ languages); workflow integration (GitHub, VS Code); voice-to-code accessibility; safety and transparency | Strong reasoning and high-quality code; productivity gains for complex tasks; framework integration; generous usage on Max plan | State-of-the-art on benchmarks (12.47% on SWE-bench); rapid execution; flexible LM integration; automation of debugging | Significant productivity boost; intelligent, context-aware suggestions; seamless VS Code integration; continuous feature updates |
| Weaknesses | Unreliable for non-trivial tasks (40–60% success rate); workflow frustrations (multi-step refactors); environment and internet limitations; stability and maturity concerns | High cost and restrictive usage caps; no native IDE integration; basic terminal UI; generic suggestions | Low real-world success rate (12.47%); limited scope (Python, clean repos); enterprise adoption barriers; outpaced by newer agents | Agentic mode unreliable for complex projects; context loss in large codebases; UI clutter and performance issues; learning curve for advanced features |

The Productivity Paradox: When AI Coding Works

Sarah Chen, a senior developer at a fintech startup, describes her experience with Cursor as transformative. "It's like having a junior developer who never sleeps," she explains. "For refactoring legacy code or writing unit tests, it's incredible. I can focus on architecture while it handles the tedious stuff."

This sentiment echoes across developer communities. OpenAI's Codex, integrated into ChatGPT for premium users, excels at what one industry observer calls "near-infinite army of junior developer" tasks — fixing typos, adding utility functions, and automating small maintenance chores. The tool supports over 12 programming languages and has become particularly valuable for Python and JavaScript developers.

Claude Code has earned praise for its reasoning capabilities, with users reporting significant productivity gains. Some developers switching from competing tools describe output increases that justify the premium subscription costs, despite sticker shock. The tool's ability to handle complex business intelligence and analytics tasks has made it particularly popular among data scientists and analysts.

SWE-agent, meanwhile, made headlines by achieving a 12.47% issue resolution rate on the SWE-bench evaluation — a dramatic improvement over previous approaches that managed only 3.8%. The tool can resolve GitHub issues in under a minute, representing a quantum leap in automated debugging capabilities.
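To put those two figures in perspective, the gap can be expressed both in percentage points and as a ratio. The short sketch below is only illustrative arithmetic on the numbers quoted above; `compare_rates` is a hypothetical helper name, not part of SWE-bench or SWE-agent.

```python
def compare_rates(new_pct: float, old_pct: float) -> tuple[float, float]:
    """Return (percentage-point gain, ratio) for two resolution rates."""
    point_gain = new_pct - old_pct   # absolute difference in percentage points
    ratio = new_pct / old_pct        # how many times larger the new rate is
    return round(point_gain, 2), round(ratio, 2)


# Figures quoted in the article: 12.47% now versus 3.8% previously.
point_gain, ratio = compare_rates(12.47, 3.8)
print(f"Gain: {point_gain} percentage points, about {ratio}x the earlier rate")
```

On these figures the improvement is roughly threefold, yet about seven out of eight benchmark issues still go unresolved.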

The Reliability Reckoning: Where AI Falls Short

But the enthusiasm comes with significant caveats. Users consistently report that these tools work well for straightforward tasks but struggle with complex, multi-step projects that require deep contextual understanding.

"Success rates for non-trivial tasks hover around 40-60%," notes one developer survey. "That's not terrible, but it's not reliable enough to trust for critical work."
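One way to read a 40-60% success rate is in terms of retries and chained steps. The sketch below assumes, purely for illustration, that every attempt or step succeeds independently with the same probability, which real coding sessions may well not satisfy; `expected_attempts` and `chain_success` are hypothetical helper names.

```python
def expected_attempts(p: float) -> float:
    """Mean number of tries until the first success (geometric distribution)."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return 1 / p


def chain_success(p: float, steps: int) -> float:
    """Probability that `steps` independent steps all succeed."""
    return p ** steps


# Assumption: per-task success probabilities taken from the quoted 40-60% range.
for p in (0.4, 0.6):
    print(
        f"p={p}: ~{expected_attempts(p):.2f} attempts per task, "
        f"{chain_success(p, 3):.1%} chance a 3-step task succeeds first time"
    )
```

Under that independence assumption, a developer would need about two attempts per task on average, and a three-step task would go through cleanly on the first pass only about 6% to 22% of the time. That is one plausible reason multi-step work feels so much less reliable than single tasks.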

The problems are particularly acute with workflow management. OpenAI's Codex forces developers to open new pull requests for each iteration, making multi-step refactors cumbersome. Error messages are often unhelpful, and the tool's sandboxed environment lacks internet connectivity, limiting its ability to resolve dependencies or install packages.

Claude Code, despite its sophisticated reasoning, suffers from workflow friction. The lack of native IDE integration means developers must constantly copy and paste between the tool and their development environment. "It's like having a brilliant consultant who can only communicate through written notes," one user complained.

The Enterprise Hesitation: Security and Cost Concerns

Perhaps most telling is the resistance from enterprise environments. Despite the technical capabilities, many organizations remain wary of AI coding tools due to security, compliance, and policy concerns.

"Our CTO comes from big tech, but we still can't use ChatGPT or similar agents," reports one developer on a major tech forum. "The security team won't budge on the policy."

Cost remains another significant barrier. Claude Code users frequently cite the tool as "ridiculously expensive," with usage caps that can be reached quickly during intensive coding sessions. The premium pricing creates a value proposition challenge: the tools work well enough to be useful but not reliably enough to justify the cost for all use cases.

The Innovation Arms Race: Rapid Obsolescence

The field is moving so quickly that today's breakthrough becomes tomorrow's baseline. SWE-agent's initial success was quickly overshadowed by newer open-source alternatives achieving 65-70% success rates. This rapid pace of innovation creates both opportunity and uncertainty for developers trying to choose the right tools.

Cursor has responded to this challenge with frequent updates, adding new features every few weeks. However, some users report that recent updates have actually degraded the reliability of agentic features, particularly when handling large or complex projects.

"The agent skips steps, makes incorrect assumptions, or fails to provide comprehensive analyses," notes one frustrated user. "It's becoming worse over time for complex tasks."

Investment Implications: Betting on the Future of Code

The AI coding assistant market represents a significant investment opportunity, but one fraught with competitive risks. The rapid pace of innovation means that today's market leaders could quickly become tomorrow's footnotes.

From an investment perspective, several trends emerge from user feedback. Tools that focus on specific niches — like data analysis or debugging — may have more sustainable competitive advantages than general-purpose coding assistants. Enterprise adoption will likely favor tools that prioritize security and compliance over cutting-edge features.

The subscription model appears sustainable for tools that deliver consistent value, but usage-based pricing may limit adoption for high-volume users. Companies that can solve the integration challenge — seamlessly embedding AI assistance into existing developer workflows — may capture disproportionate market share.

Investors should also consider the infrastructure requirements. The computational costs of running sophisticated AI models create both barriers to entry and ongoing operational challenges. Tools that can deliver comparable results with lower computational overhead may have significant cost advantages.

The Road Ahead: Maturity Through Iteration

The consensus among developers is cautiously optimistic. These tools represent a genuine advancement in software development productivity, but they're not yet mature enough to fundamentally change how complex software is built.

"We're in the early innings," explains one industry analyst. "These tools are great for speeding up routine tasks, but they're not replacing the need for experienced developers to think through complex problems."

The next phase of development will likely focus on improving reliability and integration. Tools that can maintain context across large codebases, provide better error handling, and integrate seamlessly with existing development environments will likely see the strongest adoption.

Enterprise features — security, compliance, and policy management — will become increasingly important as organizations move beyond individual developer adoption to team-wide deployment.

Conclusion: The Pragmatic Path Forward

The AI coding revolution is real, but it is more a gradual evolution than a big-bang revolution. These tools are most effective when used as sophisticated assistants rather than replacements for human judgment and creativity.

For developers, the current generation of AI coding tools offers genuine productivity benefits for specific tasks while requiring careful management of expectations for complex work. The key is understanding where each tool excels and where human expertise remains irreplaceable.

For enterprises, the decision to adopt these tools requires balancing productivity gains against security concerns and cost considerations. The most successful implementations will likely be those that integrate AI assistance into existing workflows rather than attempting to replace them entirely.

As the technology matures, the tools that survive will be those that solve real problems reliably rather than those that generate the most hype. In the fast-moving world of AI development, substance ultimately matters more than sensation.

Disclaimer: This analysis is based on current market data and user feedback. Technology markets are highly volatile, and past performance does not guarantee future results. Readers should conduct their own research and consult with qualified advisors before making investment decisions.