Fidelity Leads $1.1 Billion Investment in AI Chip Maker Cerebras as Company Claims Speed Advantage Over Nvidia GPUs

Silicon Insurgency: Cerebras’ $1.1 Billion Bet Shakes Up AI’s Hardware Battlefield

Fidelity leads a massive round valuing Cerebras at $8.1 billion, signaling that the AI chip wars are far from settled.

SUNNYVALE, Calif. — Nvidia may still dominate the world of AI computing, but its grip is starting to loosen. Today, Cerebras Systems raised an eye-popping $1.1 billion in an oversubscribed Series G round. The deal, led by Fidelity Management & Research alongside Atreides Management, pushes the startup’s valuation to $8.1 billion and cements it as one of the boldest challengers yet to Nvidia’s GPU empire.

This funding arrives at a crucial turning point. The industry’s attention has shifted from training gigantic language models to actually running them in production. That shift exposes the weaknesses of existing hardware and opens the door for companies building chips with one job in mind: fast, reliable inference.

Speed Is Now the Real Weapon

Money is pouring in because inference—the act of putting models to work—has become the industry’s choke point. Training once grabbed all the headlines, but today companies care about shaving milliseconds off response times. Every delay can frustrate users, slow down tools like code assistants, or even hurt competitive positioning.

Cerebras says its massive wafer-scale systems run leading open-source models more than 20 times faster than Nvidia GPUs, citing benchmarks from third-party firm Artificial Analysis. The company claims to serve trillions of tokens every month, not just in labs but across customer data centers, cloud infrastructure, and partner platforms.

The customer list reads like a who’s who of tech. AWS, Meta, IBM, and hot startups such as Mistral and Notion are on board, along with the U.S. Department of Energy and Department of Defense. Even Hugging Face developers seem to have taken to Cerebras: the company says it is now the top inference provider on the platform, handling more than five million requests a month.

Building Chips at Home

Cerebras plans to channel much of this new capital into U.S. manufacturing and domestic data centers. The timing couldn’t be better. Washington is pushing for semiconductor independence through the CHIPS Act, and agencies want to reduce their reliance on a single vendor for national security reasons.

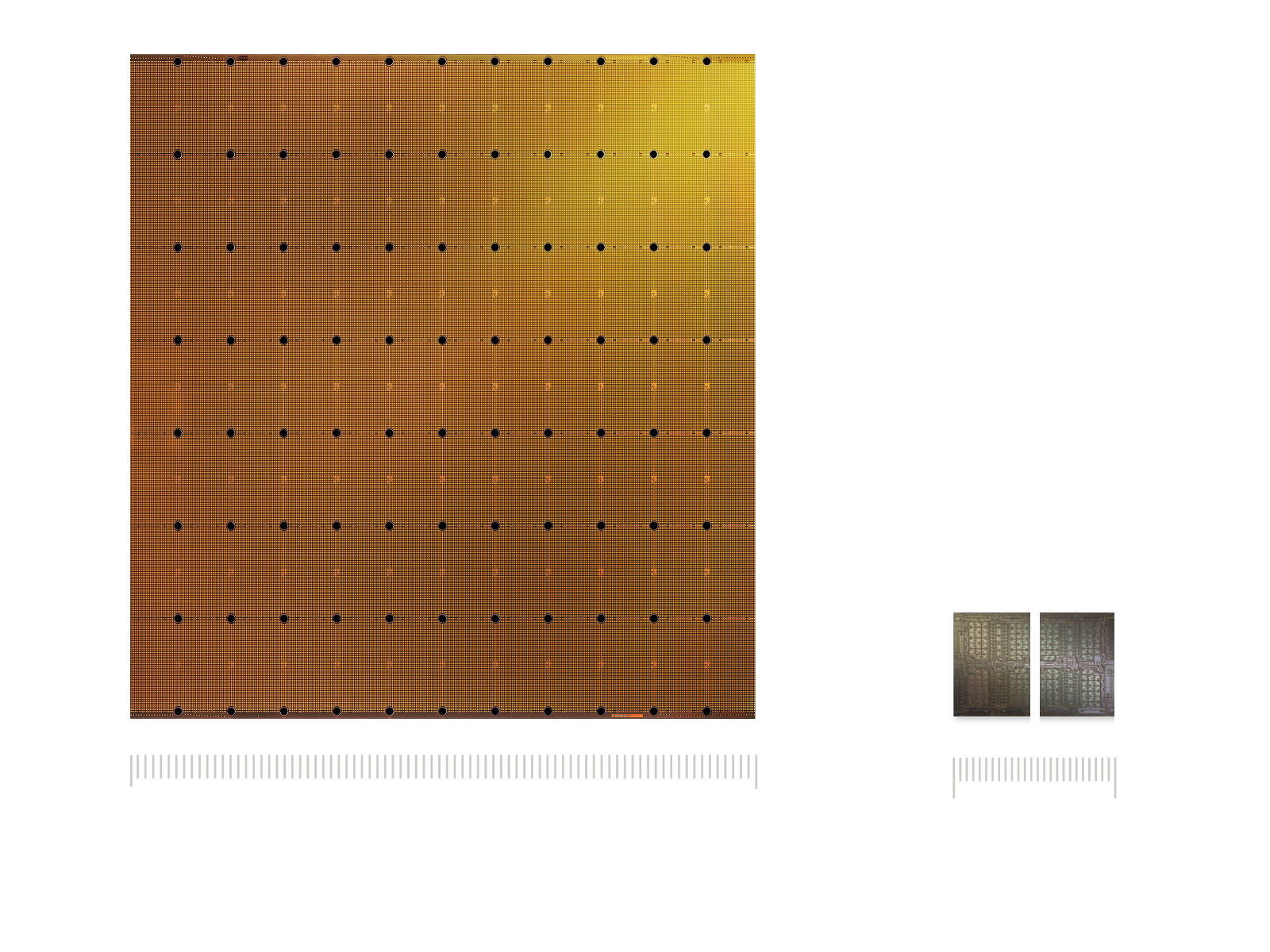

The company’s strategy borrows a page from Nvidia’s playbook: control the full stack, from chip design to system architecture to cloud delivery. The difference? Instead of general-purpose GPUs, Cerebras is betting on specialized wafer-scale processors, which it is now pitching squarely at inference workloads. In an era where Moore’s Law no longer delivers easy gains, specialization could become the trump card.

A Broader Shift in AI Infrastructure

Cerebras isn’t alone in chasing this opportunity. Competitors and adjacent players are raising huge sums as well. Groq closed $750 million in September, buoyed by contracts in the Middle East. Photonics firms like Celestial AI, Lightmatter, and Ayar Labs have together pulled in over $800 million to tackle the bandwidth bottlenecks that slow data movement between chips.

Tech giants are also building their own chips: Meta’s MTIA, Microsoft’s Maia, AWS’s Inferentia, and Google’s TPUs. Each represents billions of dollars aimed at cutting dependence on Nvidia. Collectively, these moves confirm one thing: the economics of inference justify heavy investment, even if not every bet will pay off.

Analysts expect AI infrastructure spending to soar to nearly half a trillion dollars by 2026 and possibly $2.8 trillion by 2029. But those numbers hinge on whether companies can keep utilization rates high enough to justify the buildout.

Utilization and the Hard Math

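Behind the headlines lies a thornier truth. Surveys show that most GPUs in enterprise clusters sit idle far more often than financial models assume. Even at peak demand, many facilities run well below the 80–90% utilization those models typically count on. That gap matters, especially when data centers are financed with billions in loans: financing and depreciation costs accrue whether the hardware is busy or not, so every idle hour raises the effective cost of each token served.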
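To make the utilization math concrete, here is a minimal sketch of how idle time inflates the cost of serving tokens. The function name `cost_per_million_tokens` and every number in it (a $10-per-hour fully loaded server producing 5,000 tokens per second at full load) are hypothetical inputs chosen for illustration, not figures from Cerebras, Nvidia, or the surveys mentioned above. The sketch assumes the hourly cost is fixed and paid whether or not the hardware is doing useful work.

```python
# Hypothetical illustration: all inputs are made-up, not data from any vendor or survey.

def cost_per_million_tokens(hourly_cost_usd: float,
                            peak_tokens_per_second: float,
                            utilization: float) -> float:
    """Effective cost of serving one million tokens.

    hourly_cost_usd: fully loaded hourly cost (financing, depreciation, power),
        assumed to be paid whether or not the system is busy.
    peak_tokens_per_second: throughput when the system is fully busy.
    utilization: fraction of each hour spent doing useful work, in (0, 1].
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # Only the busy fraction of the hour produces tokens, but the whole hour is paid for.
    useful_tokens_per_hour = peak_tokens_per_second * 3600 * utilization
    return hourly_cost_usd / useful_tokens_per_hour * 1_000_000


if __name__ == "__main__":
    # Hypothetical server: $10/hour fully loaded, 5,000 tokens/s at full load.
    for u in (0.85, 0.50, 0.30):
        cost = cost_per_million_tokens(10.0, 5000.0, u)
        print(f"utilization {u:.0%}: ${cost:.3f} per million tokens")
```

With these made-up inputs, the same server costs about $0.65 per million tokens at 85% utilization but about $1.85 at 30%, nearly three times as much, even though nothing about the hardware changed. Because cost scales with the inverse of utilization, a financing model built on 85% that meets 30% in practice is off by the same factor.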

In fact, the industry is already leaning on exotic financing structures. In the first half of 2025 alone, firms issued $13.4 billion in data center asset-backed securities and took out more than $11 billion in GPU-backed loans. Some deals have even brushed against technical defaults before lenders offered waivers.

Add in real-world constraints—power grid shortages, slow utility upgrades, and limited supply of high-bandwidth memory—and the picture looks more fragile than many investors assume.

How Much Faster, Really?

Cerebras’ benchmark claims raise eyebrows too. Its numbers come mainly from Artificial Analysis, a third-party firm whose methodology is less transparent than established benchmark suites like MLPerf. Meanwhile, Nvidia keeps squeezing more performance out of its software stack. Libraries such as TensorRT and techniques like advanced quantization can narrow performance gaps without new hardware, making head-to-head comparisons tricky.

Specialized chips often shine in narrow use cases but stumble when workloads get messy. Real-world deployments don’t look like polished benchmarks—they involve unpredictable traffic, longer context lengths, and bursty demand. That’s where economics, not marketing slides, will decide winners.

What Investors Should Really Watch

For investors, flashy throughput claims aren’t enough. The real metrics are simple: cost per thousand tokens at target latency, time to first token, energy consumed per inference, and how consistently systems hit service-level goals. Independent audits under realistic workloads would add far more credibility than cherry-picked demos.
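The metrics in the paragraph above are straightforward to compute once a provider exposes per-request logs. The minimal sketch below assumes a hypothetical log format (`RequestRecord`, holding time to first token, output tokens, and attributed energy for each request) and a hypothetical helper, `serving_scorecard`; neither comes from Cerebras, Nvidia, or any benchmark suite. It also makes one labeled assumption: "cost per thousand tokens at target latency" is read as the window's cost divided by only the tokens from requests that met the time-to-first-token target, so late responses count as wasted spend.

```python
from dataclasses import dataclass
from statistics import mean, quantiles
from typing import Dict, Sequence


@dataclass
class RequestRecord:
    """One served request from a hypothetical production log."""
    ttft_s: float         # time to first token, in seconds
    output_tokens: int    # tokens generated for this request
    energy_joules: float  # energy attributed to this request


def serving_scorecard(records: Sequence[RequestRecord],
                      window_cost_usd: float,
                      ttft_slo_s: float) -> Dict[str, float]:
    """Summarize one logging window into investor-facing serving metrics.

    window_cost_usd: total cost of running the system over the window.
    ttft_slo_s: time-to-first-token target; slower requests miss the SLO.
    Assumption: cost per 1K tokens counts only tokens from on-time requests.
    """
    if not records:
        raise ValueError("need at least one request record")
    on_time = [r for r in records if r.ttft_s <= ttft_slo_s]
    good_tokens = sum(r.output_tokens for r in on_time)
    ttfts = [r.ttft_s for r in records]
    # 95th-percentile TTFT; "inclusive" keeps the estimate within observed values.
    p95 = quantiles(ttfts, n=20, method="inclusive")[-1] if len(ttfts) > 1 else ttfts[0]
    return {
        "slo_attainment": len(on_time) / len(records),
        "p95_ttft_s": p95,
        "energy_per_request_j": mean(r.energy_joules for r in records),
        "cost_per_1k_on_time_tokens_usd": (
            window_cost_usd / good_tokens * 1000 if good_tokens else float("inf")
        ),
    }
```

For example, four logged requests with times to first token of 0.2, 0.4, 1.5, and 0.3 seconds, checked against a 0.5-second target, give 75% SLO attainment, and the slow request's tokens drop out of the cost denominator. Folding the latency target into the cost figure is the design choice here: a system that posts a low cost per token only by letting responses run late gets no credit for it.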

Some analysts argue that safer bets lie in the “picks and shovels” of AI infrastructure—memory suppliers, cooling systems, utilities—because they benefit no matter which chipmaker comes out on top. Specialized inference startups like Cerebras could still thrive, but they may fit better as option-sized plays in a portfolio rather than core holdings.

And don’t forget the looming shadow of hyperscalers. As AWS, Meta, Google, and Microsoft keep rolling out their own accelerators, the market for third-party chips could shrink to niches where cost, sovereignty, or raw performance make them indispensable.

A Market on the Edge

The rush to build AI infrastructure carries echoes of the telecom boom of the 1990s, when overbuilding left a trail of stranded assets. Today’s projections assume breathtaking revenue growth to justify the capital pouring in. If revenue fails to catch up with spending, the bubble could burst.

Outcomes range widely. In the best case, Cerebras proves its edge, wins hyperscaler adoption, and rides into the public markets as a true Nvidia alternative. In the middle scenario, it dominates select workloads while Nvidia keeps the crown elsewhere. The worst case? Its benchmark lead fades, adoption slows, and cash burn forces a sale or merger.

Investors would be wise to ask for hard evidence: audited metrics from live deployments, locked-in power deals, confirmed supply of critical memory, and clear debt structures. That’s what will separate durable challengers from hopeful dreamers.

The $1.1 billion round underscores the central question in AI infrastructure today: can specialized chips carve out real market share, or will Nvidia’s ecosystem and relentless software improvements keep it in control? The answer won’t come from PowerPoint slides or record-breaking raises. It’ll come from how well these systems perform in production, at scale, under pressure. For now, though, big-money investors are betting that Cerebras still has a fighting chance.