Open-Source AI Breakthrough Threatens Video Animation Industry Giants

New 14-billion parameter model outperforms proprietary systems, raising questions about market dynamics and competitive moats in artificial intelligence

A seismic shift is unfolding in the artificial intelligence video generation sector as Alibaba researchers release Wan-Animate-14B, an open-source model that reportedly surpasses industry-leading proprietary systems in creating realistic character animations. The development signals a potential disruption to established players commanding premium pricing for similar capabilities.

Announced on September 19, 2025, the model targets two tasks: animating a static character image so that it mimics human motion from a reference video, and replacing a character within existing footage. Human preference studies indicate that participants favor Wan-Animate's output over both Runway's Act-Two and ByteDance's DreamActor-M1, two commercial systems that have dominated the professional market.

The Algorithm That Changes Everything

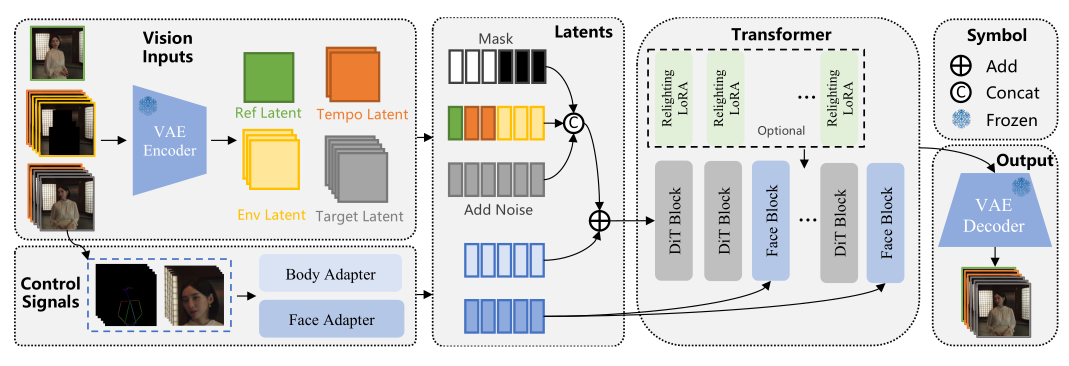

Wan-Animate's architecture represents a sophisticated fusion of existing technologies, built upon the Wan-I2V foundation model using Diffusion Transformer principles. The system's innovation lies not in revolutionary new algorithms, but in its unified approach to handling dual functionality within a single model architecture.

The model operates in two distinct modes. In animation mode, it generates videos in which a source character performs the expressions and movements from a driving video while maintaining the original background. Replacement mode goes further, substituting the original character in a reference video with a new character and matching lighting and environmental conditions through a relighting LoRA (Low-Rank Adaptation) module trained for dynamic lighting adjustment.

Our internal analysis reveals the model's sophisticated control mechanism, which decouples body motion through 2D skeletal structures from facial expressions using implicit feature extraction. The system injects spatial skeleton information directly into initial noise latents while routing facial expression data through cross-attention mechanisms in Transformer blocks. This architectural separation allows for what researchers term "holistic replication" of reference performances with remarkable fidelity.
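The separation described above can be illustrated with a toy sketch. It is not the released implementation: it assumes the skeleton signal is simply added to the noise latents, uses tiny hand-sized vectors, and omits the learned projections a real Transformer block would have. The function names are hypothetical.

```python
import math
import random

def add_skeleton_to_noise(noise, skeleton):
    """Body-motion path: add skeleton features to the initial noise latents.
    Element-wise addition is an assumption made for illustration."""
    return [[n + s for n, s in zip(n_row, s_row)]
            for n_row, s_row in zip(noise, skeleton)]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, face_tokens):
    """Expression path: each video token attends over the face tokens.
    Keys and values are the raw face tokens (no learned projections)."""
    dim = len(face_tokens[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in face_tokens]
        weights = softmax(scores)
        out.append([sum(w * v[d] for w, v in zip(weights, face_tokens))
                    for d in range(dim)])
    return out

def toy_block(noise, skeleton, face_tokens):
    """Combine both paths: skeleton enters via the latents,
    facial features enter via a residual cross-attention update."""
    latents = add_skeleton_to_noise(noise, skeleton)
    face_update = cross_attention(latents, face_tokens)
    return [[x + u for x, u in zip(l_row, u_row)]
            for l_row, u_row in zip(latents, face_update)]

rng = random.Random(0)
noise = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(6)]     # 6 video tokens
skeleton = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(6)]  # aligned with latents
face = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(3)]      # 3 face tokens
out = toy_block(noise, skeleton, face)
print(len(out), len(out[0]))
```

The point of the sketch is the routing: the skeleton has to be spatially aligned with the latents because it is merged with them position by position, while the face tokens can have any length because attention handles the alignment.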

The model's foundation on Diffusion Transformer architecture provides significant advantages over traditional UNet-based systems, particularly in temporal consistency and overall video quality. However, the computational demands are substantial, with users reporting out-of-memory errors even on high-end GPUs like the RTX 5090 when attempting 1280×720 resolution at 121 frames, often requiring resolution compromises for practical deployment.
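A back-of-the-envelope calculation helps explain why a 32GB card struggles at that setting. The sketch below assumes a video VAE that compresses 8x spatially and 4x temporally, a 2x2 spatial patch size, and 16-bit weights; none of these figures come from the announcement, and activation memory is ignored entirely.

```python
def latent_token_count(width, height, frames,
                       spatial_stride=8, temporal_stride=4, patch=2):
    """Number of Transformer tokens for one clip.
    Strides and patch size are assumed values, not published specs."""
    latent_frames = (frames - 1) // temporal_stride + 1
    tokens_per_frame = ((height // spatial_stride // patch)
                        * (width // spatial_stride // patch))
    return latent_frames * tokens_per_frame

def weight_gib(params, bytes_per_param=2):
    """Memory needed just to hold the weights, in GiB (2 bytes = 16-bit)."""
    return params * bytes_per_param / 2**30

tokens = latent_token_count(1280, 720, 121)
print(tokens)                      # 111600
print(round(weight_gib(14e9), 1))  # 26.1
```

Under these assumptions the weights of a 14-billion parameter model alone occupy roughly 26 GiB, leaving little headroom for attention over more than a hundred thousand tokens, which is consistent with the reports of resolution compromises.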

WAN 2.2's Broader Market Validation

Wan-Animate-14B emerges from the broader WAN 2.2 model family, which has drawn a strong response across creative communities. User feedback on Reddit, X, and YouTube points to substantial quality improvements in motion control, cinematic camera movements, and prompt adherence compared to the previous 2.1 version.

In our internal testing, WAN 2.2 was competitive with established commercial systems such as Kling and Hailuo and roughly on par with Google Veo 3, particularly for short-form cinematic content. Its composition, dynamic camera control, and text rendering stood out. The Apache-style commercial licensing has attracted creators seeking alternatives to heavily censored commercial platforms.

However, real-world deployment reveals practical constraints that may affect broader adoption. Users report sharply longer render times at higher step counts; 720p videos at 15 steps produce excellent results, but longer sequences show quality degradation. The "VRAM-hungry" nature of the system forces resolution compromises even on premium hardware, with 32GB VRAM setups still requiring adjustments for extended clips.
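The render-time reports can be sanity-checked with a rough cost model. The sketch below assumes every denoising step costs the same and that self-attention dominates, so cost grows linearly with step count and quadratically with token count. Both are simplifications, and the baseline of 1,000 tokens is an arbitrary placeholder; only the 15-step baseline comes from the user reports.

```python
def relative_render_cost(steps, tokens, base_steps=15, base_tokens=1000):
    """Cost relative to a baseline run.
    Linear in steps, quadratic in tokens (attention-dominated assumption)."""
    return (steps / base_steps) * (tokens / base_tokens) ** 2

print(relative_render_cost(30, 1000))  # doubling steps
print(relative_render_cost(15, 2000))  # doubling clip length (tokens)
```

Under this model, doubling the step count doubles the cost, while doubling the clip length quadruples it, which suggests that the steepest slowdowns users describe are more likely tied to longer or larger clips than to step count alone.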

Technical community feedback identifies persistent challenges including occasional morphing artifacts, "plastic" appearances in text-to-video outputs, and oversaturated tonal characteristics compared to commercial alternatives. Motion speed calibration remains problematic in fast-action sequences, though Lightning and LightX2V optimization workflows offer speed improvements at some quality cost.

Market Disruption Through Open Access

The release's timing coincides with growing investor scrutiny over artificial intelligence valuations and competitive sustainability. Companies like Runway, which secured funding at multibillion-dollar valuations based partly on their video generation capabilities, now face direct competition from freely available alternatives.

Our market analysis suggests the development exemplifies a broader trend where open-source initiatives challenge proprietary AI systems. Historical patterns in software development indicate that high-quality open alternatives often compress margins and force incumbents to innovate rapidly or risk obsolescence.

The model's performance against established commercial systems raises fundamental questions about the defensive moats surrounding current market leaders. Traditional advantages like data access, computational resources, and talent acquisition appear less decisive when foundational models become publicly accessible.

Computational Infrastructure and Investment Implications

Wan-Animate's resource requirements present both challenges and opportunities across the technology ecosystem. Real-world deployment data reveals the model's substantial computational demands, with users reporting memory limitations even on premium hardware configurations. The need for dual expert models (high-noise and low-noise), a UMT5-XXL text encoder, and specialized VAE components creates a complex deployment architecture that strains traditional GPU memory configurations.
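One way to picture the dual-expert arrangement is as a switch on the current noise level during denoising. The sketch below is illustrative only: the 0.5 boundary, the linear schedule, and the function names are assumptions, not values from the release.

```python
def pick_expert(noise_level, boundary=0.5):
    """Choose which expert handles a denoising step.
    noise_level runs from 1.0 (pure noise) toward 0.0 (clean video).
    The boundary value is a hypothetical placeholder."""
    if not 0.0 <= noise_level <= 1.0:
        raise ValueError("noise_level must be in [0, 1]")
    return "high_noise" if noise_level >= boundary else "low_noise"

def plan_schedule(num_steps, boundary=0.5):
    """Pair each step's noise level with the expert that would run it,
    using a simple linear schedule for illustration."""
    levels = [1.0 - i / num_steps for i in range(num_steps)]
    return [(level, pick_expert(level, boundary)) for level in levels]

for level, expert in plan_schedule(4):
    print(level, expert)
```

Because each step uses only one expert, a deployment could in principle keep just the active expert in GPU memory and swap at the boundary, trading load time for a smaller footprint.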

Multi-GPU implementations built on FSDP (Fully Sharded Data Parallel) and DeepSpeed Ulysses demonstrate the model's scalability potential, but they also underscore the infrastructure investment required for practical deployment. Community reports indicate that successful operation requires careful memory management, with 720p resolution becoming the practical standard even on high-end systems, and they highlight the ongoing hardware bottleneck in AI video generation.
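The appeal of these two techniques is easiest to see as arithmetic. The sketch below is idealized: it assumes 16-bit weights split evenly under FSDP (ignoring the layers temporarily gathered during computation), and a Ulysses-style split in which each GPU holds a slice of the sequence outside attention and a subset of heads inside it. The 40-head and 111,600-token figures are hypothetical inputs.

```python
def fsdp_weight_gib_per_gpu(params, num_gpus, bytes_per_param=2):
    """Idealized per-GPU weight memory when parameters are fully sharded.
    Real peak usage is higher because layers are gathered on demand."""
    return params * bytes_per_param / num_gpus / 2**30

def ulysses_split(tokens, heads, num_gpus):
    """Ulysses-style sequence parallelism: tokens are divided across GPUs,
    then an all-to-all gives each GPU the full sequence for heads/num_gpus heads."""
    if tokens % num_gpus or heads % num_gpus:
        raise ValueError("tokens and heads must divide evenly across GPUs")
    return {"tokens_per_gpu": tokens // num_gpus,
            "heads_per_gpu": heads // num_gpus}

print(round(fsdp_weight_gib_per_gpu(14e9, 8), 2))  # 3.26
print(ulysses_split(111600, 40, 8))
```

On eight GPUs the per-device weight footprint of a 14-billion parameter model drops from roughly 26 GiB to a little over 3 GiB in this idealized view, which is why sharding makes higher resolutions feasible at the cost of more hardware and communication overhead.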

This computational reality creates distinct investment opportunities. Semiconductor manufacturers specializing in high-memory GPU architectures may see sustained demand as video generation workloads become mainstream. Cloud infrastructure providers offering optimized AI inference platforms could capture significant market share from organizations seeking to implement advanced video capabilities without substantial hardware investments.

The emergence of community-driven optimization techniques, including Lightning workflows and attention mechanism improvements, suggests a parallel ecosystem developing around efficient deployment solutions. Companies developing model optimization tools, memory management systems, and specialized inference hardware may find substantial market opportunities as the technology scales beyond research environments.

Content Creation Economy Transformation

The entertainment and marketing industries face potential restructuring as production costs for high-quality character animation decline dramatically, though practical adoption reveals a more nuanced transition than initially anticipated. Independent creators and small studios gain access to capabilities previously restricted to major production houses, but the technical complexity and computational requirements create new barriers to entry.

Community adoption patterns indicate a bifurcated market emerging. Professional creators develop sophisticated workflows combining WAN 2.2's cinematic capabilities with post-processing pipelines using tools like Topaz AI and GIMM-VFI for upscaling and interpolation. These hybrid approaches allow teams to leverage the model's strengths in short-form content while addressing its limitations in extended sequences and resolution constraints.

The model's strength in dynamic camera movements and prompt adherence particularly benefits pre-visualization workflows in film and advertising. Studios report using the system for rapid concept development and directorial communication, though final production still requires traditional techniques for quality and consistency. The Apache-style licensing removes legal barriers that have complicated commercial deployment of previous open-source models.

However, the technology's current limitations, including steep render time increases, resolution constraints, and artifact management, suggest that professional adoption will likely focus on specific use cases rather than wholesale replacement of existing pipelines. Marketing agencies experimenting with virtual influencer content and social media creators producing short-form videos represent the most immediate commercial applications.

Forward-Looking Market Analysis

Current market dynamics suggest several investment themes emerging from this development. The convergence of open-source AI capabilities with professional content creation needs may favor companies that can effectively integrate and commercialize freely available technologies rather than developing proprietary alternatives.

Infrastructure providers enabling AI model deployment and scaling could see sustained demand growth as organizations seek to implement advanced video generation capabilities. This includes specialized hardware manufacturers, cloud computing platforms, and software companies offering model optimization and deployment tools.

Traditional media and entertainment companies may need to evaluate their technology strategies, potentially shifting investment from developing internal AI capabilities toward acquiring and integrating best-of-breed open solutions. This reallocation could affect venture capital flows and merger activity within the sector.

The competitive landscape appears to favor organizations with strong execution capabilities, customer relationships, and integration expertise over those relying primarily on algorithmic advantages. As technical differentiation diminishes, business model innovation and operational efficiency become more critical success factors.

Risk Assessment and Market Outlook

While Wan-Animate represents significant technical progress, several factors could affect its market impact. The model's computational requirements limit immediate accessibility, and integration challenges may slow enterprise adoption. Regulatory responses to synthetic media capabilities remain uncertain, potentially affecting commercial deployment timelines.

However, the broader trend toward open-source AI development appears sustainable, suggesting that companies dependent on proprietary video generation algorithms may face continued pressure. Investors may benefit from monitoring competitive responses from established players and evaluating whether current market valuations adequately reflect these technological shifts.

The development underscores the rapid pace of AI advancement and the difficulty in maintaining competitive moats based solely on algorithmic capabilities. As the technology landscape continues evolving, successful companies will likely be those that can adapt quickly to leverage new capabilities while building sustainable competitive advantages through execution, customer relationships, and strategic positioning.

Disclaimer: This analysis is based on publicly available information and does not constitute investment advice. Past performance does not guarantee future results. Readers should consult with financial advisors before making investment decisions.