Google DeepMind Unveils Gemini Diffusion: A Paradigm Shift in AI Text Generation

Google DeepMind yesterday announced Gemini Diffusion, an experimental language model that applies the noise-to-signal approach of image generation to text for the first time at production scale. The breakthrough promises significantly faster text generation with improved coherence, potentially upending the dominant approach to large language models that has defined AI development for years.

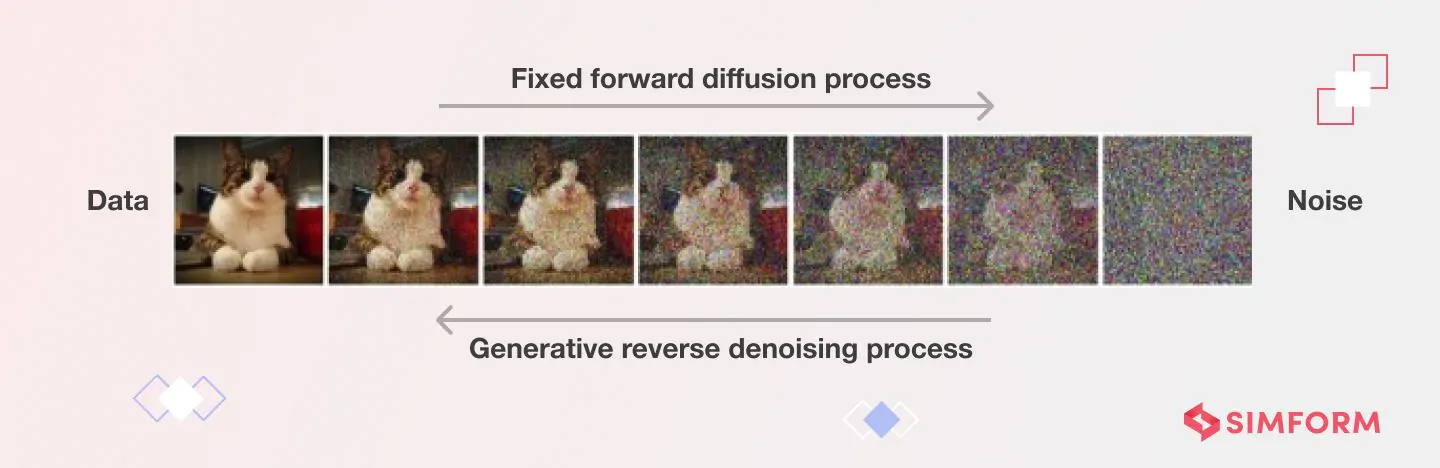

Unlike conventional autoregressive models that generate text one token at a time, Gemini Diffusion works by refining noise into coherent text through iterative steps – a process that Google claims delivers responses "significantly faster than even our fastest model so far."

"This represents a fundamental shift in how we think about language generation," said a senior AI researcher familiar with diffusion technology but not affiliated with Google. "We're seeing the potential for a 4-5x improvement in end-to-end output speed compared to similarly sized autoregressive models. That's like skipping ahead several hardware generations through software innovation alone."

Breaking the Sequential Barrier

The technical innovation behind Gemini Diffusion tackles a core limitation of current AI systems. Traditional language models like GPT-4 or previous Gemini versions work sequentially, predicting each word based on what came before. This approach, while effective, inherently limits speed and can lead to coherence issues in longer outputs.

Diffusion models take a radically different approach. Rather than building text piece by piece, they start with randomized noise and gradually refine it into meaningful content through repeated denoising steps.

"The entire process is more like sculpting than writing," explained an industry analyst who specializes in AI architectures. "The model considers the full context at every refinement stage, naturally enabling error correction and holistic coherence that's harder to achieve with token-by-token generation."

Benchmark results released by Google show that Gemini Diffusion achieves an average sampling speed of 1,479 tokens per second – a substantial improvement over previous models – though this comes with approximately 0.84 seconds of overhead for each generation.

Mixed Benchmark Performance Reveals Strengths and Limitations

Google's benchmark data reveals Gemini Diffusion's uneven but promising performance profile. The model shows particular strength in coding tasks, scoring 89.6% on HumanEval and 76.0% on MBPP – virtually identical to Gemini 2.0 Flash-Lite's scores of 90.2% and 75.8%, respectively.

However, the model shows notable weaknesses in certain areas. On the BIG-Bench Extra Hard reasoning test, Gemini Diffusion scored 15.0% compared to Flash-Lite's 21.0%. Similarly, on the Global MMLU multilingual benchmark, Diffusion achieved 69.1% versus Flash-Lite's 79.0%.

"What we're seeing is a technology that excels at tasks requiring iterative refinement, like coding, where small localized tweaks within a global context are valuable," noted a machine learning expert at a major financial institution. "The weaker performance on reasoning tasks suggests diffusion may need architectural tuning for logic-heavy applications."

Despite these limitations, Google DeepMind highlights the model's parameter efficiency, achieving comparable benchmark scores to larger autoregressive models in many domains.

Technical Challenges in Text Diffusion Model Design and Implementation

| Challenge Category | Specific Challenge | Description |

|---|---|---|

| Computational and Efficiency | Processing Demands | Requires hundreds to thousands of denoising steps, each involving a complete forward pass through a neural network |

| Latency Issues | Inference can be remarkably slow, limiting real-time applications | |

| Memory Consumption | Significant memory requirements with large intermediate feature maps during each step of reverse diffusion | |

| Text-Specific Implementation | Architecture Limitations | Cannot benefit from KV-caching due to non-causal attention computation |

| Q_absorb Transition Limitations | Denoises tokens only once, limiting ability to edit previously generated tokens | |

| Processing Inefficiencies | Masked tokens provide no information but still consume computational resources | |

| Fixed Generation Length | Major obstacle for open-ended text generation compared to autoregressive models | |

| Control and Alignment | Text Accuracy Issues | Struggle to adhere to complete set of conditions specified in input text |

| Faithfulness Problems | Often generate content with wrong meaning or details despite natural-looking output | |

| Inconsistent Outputs | Different random samples can produce vastly different results with the same prompt | |

| Text Rendering | Difficulty in rendering correct text and controlling text style in image generation | |

| Theoretical and Learning | Score Function Challenges | Performance tied to accurately learning the score function |

| Trade-off Balancing | Finding optimal balance between speed, cost, and quality remains unsolved | |

| Deployment | Resource Constraints | Limited compute throughput, memory capacity, and power budget on edge devices |

| Thermal Management | Many devices rely on passive cooling, making sustained high-throughput workloads impractical | |

| Production Integration | Handling variable latency and high memory usage complicates system integration | |

| Security Concerns | Preventing misuse requires robust safeguards that add overhead | |

| Version Control | Updates may break downstream applications when fine-tuning for specific use cases |

Editing and Refinement: A New AI Strength

Perhaps the most significant advantage of the diffusion approach is its natural aptitude for editing and refinement tasks.

"At each denoising step, the model can self-correct factual or syntactic mistakes," said a computer science professor who studies generative AI. "This makes diffusion particularly powerful for tasks like mathematical derivations or code fixes, where you need to maintain consistency across complex relationships."

This self-correction capability offers a potential solution to challenges like hallucinations and drift that have plagued large language models. By considering the entire output at each step rather than just the preceding tokens, Gemini Diffusion can maintain better coherence across longer passages.

Early Access and Future Implications

Google has opened a waitlist for developers interested in testing Gemini Diffusion, describing it as "an experimental demo to help develop and refine future models."

For professional users and investors, the implications extend far beyond a single product release. Diffusion models could fundamentally alter the AI landscape if they continue to demonstrate advantages in speed and quality.

"We're potentially seeing the beginning of a hybrid era," suggested an AI investment strategist at a major hedge fund. "The next two years might be dominated by models that combine diffusion's speed and coherence with the token-wise reasoning strengths of autoregressive approaches."

The technology appears especially promising for interactive editing tools, where users could refine AI outputs mid-generation or apply constraints dynamically. This could enable more precise control than current single-shot prompt engineering allows.

Market Implications of the Diffusion Shift

For traders and investors watching the AI space, Gemini Diffusion represents both opportunity and disruption.

"This innovation bends the cost curve for inference at scale," said a technology sector analyst. "Companies heavily invested in autoregressive-optimized infrastructure may need to pivot, while those working on editing capabilities and interactive AI experiences could see their positioning strengthened."

The announcement signals intensifying competition in the AI race, with Google leveraging its research depth to differentiate its offerings from OpenAI, Anthropic, and others. For enterprise customers, the promise of faster generation with comparable quality could significantly reduce computing costs.

However, significant barriers remain before diffusion models could become mainstream. The ecosystem of tools, safety audits, and deployment best practices for text diffusion remains far less mature than for autoregressive models. Early adopters may face integration challenges and uneven quality across domains.

"The big question is whether text diffusion is the future or just one important component of it," observed an AI governance expert. "Success will likely belong to systems that blend diffusion with token-wise reasoning, retrieval, and robust safety layers."