The $100 Billion Bet: NVIDIA and OpenAI Forge Partnership That Could Reshape AI's Future

Strategic alliance for 10-gigawatt infrastructure marks unprecedented scale in artificial intelligence computing, signaling industry shift toward energy-intensive AI factories

NVIDIA Corporation and OpenAI announced a landmark strategic partnership on Sunday that will see the chipmaker invest up to $100 billion to deploy at least 10 gigawatts of AI systems for OpenAI's next-generation infrastructure.

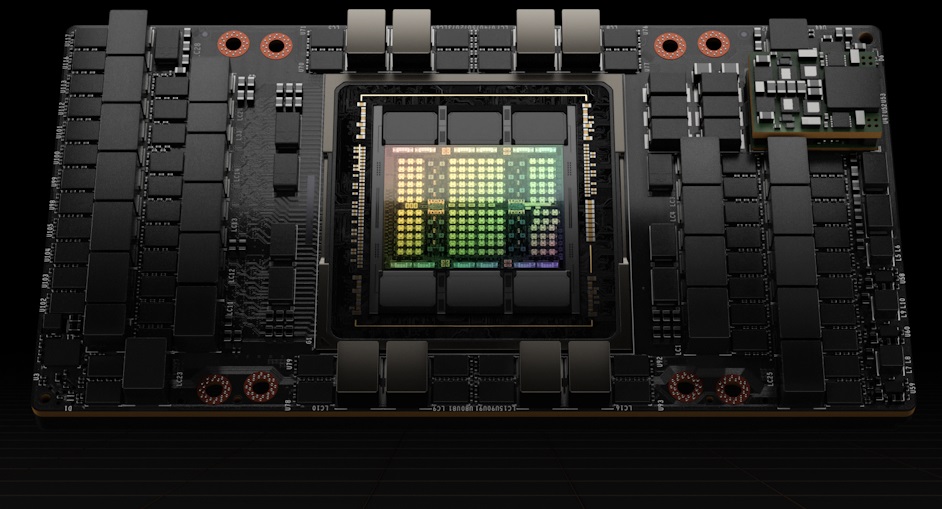

The agreement, formalized through a letter of intent, represents one of the most significant capital commitments in the AI sector's brief but explosive history. The partnership will progressively deploy millions of NVIDIA GPUs as OpenAI builds what industry experts describe as "AI factories" designed to train and operate superintelligent systems.

The first gigawatt of capacity is targeted to come online in the second half of 2026, utilizing NVIDIA's upcoming Vera Rubin platform. This initial deployment alone would consume roughly the same amount of continuous power as a large metropolitan area, highlighting the scale of infrastructure required for frontier AI development.

When Silicon Valley Meets the Power Grid

The 10-gigawatt commitment translates to approximately 87.6 terawatt-hours of annual energy consumption if run continuously at full load, roughly comparable to the annual electricity consumption of countries like Belgium or Chile. Industry analysts estimate this infrastructure could accommodate between 5.6 and 10.4 million GPU accelerators across multiple data center campuses, representing a hardware value potentially exceeding $400 billion.
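The conversion from gigawatts to terawatt-hours is simple arithmetic, and a short Python sketch makes its assumptions explicit. It assumes the full 10 gigawatts is drawn around the clock for a 365-day year, and it spreads that power across the quoted accelerator range to get an all-in facility power budget per accelerator (cooling and networking included). The function names are illustrative only and do not come from the announcement.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored (assumption)


def annual_energy_twh(power_gw: float) -> float:
    """Energy in TWh for a constant load of `power_gw` gigawatts over one year."""
    gwh = power_gw * HOURS_PER_YEAR  # gigawatt-hours
    return gwh / 1000                # 1 TWh = 1,000 GWh


def kw_per_accelerator(power_gw: float, accelerators_millions: float) -> float:
    """All-in facility power per accelerator, in kilowatts."""
    total_kw = power_gw * 1e6                  # 1 GW = 1,000,000 kW
    return total_kw / (accelerators_millions * 1e6)


energy = annual_energy_twh(10)
budget_low_count = kw_per_accelerator(10, 5.6)    # fewer, hungrier accelerators
budget_high_count = kw_per_accelerator(10, 10.4)  # more, leaner accelerators

print(f"{energy:.1f} TWh per year")
print(f"{budget_high_count:.2f} to {budget_low_count:.2f} kW per accelerator")
```

Under these assumptions the 5.6 to 10.4 million range corresponds to roughly 1.8 kW down to 1.0 kW of facility power per accelerator. Any real deployment running below full load would consume less than 87.6 TWh.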

The projected 87.6 TWh annual energy consumption of the 10-gigawatt AI infrastructure compared to the annual electricity consumption of selected countries.

| Country/Infrastructure | Annual Electricity Consumption (TWh) |
|---|---|
| AI Infrastructure | 87.6 |
| Chile | 83.3 |
| Belgium | 78 |
| Norway | 135.68 |

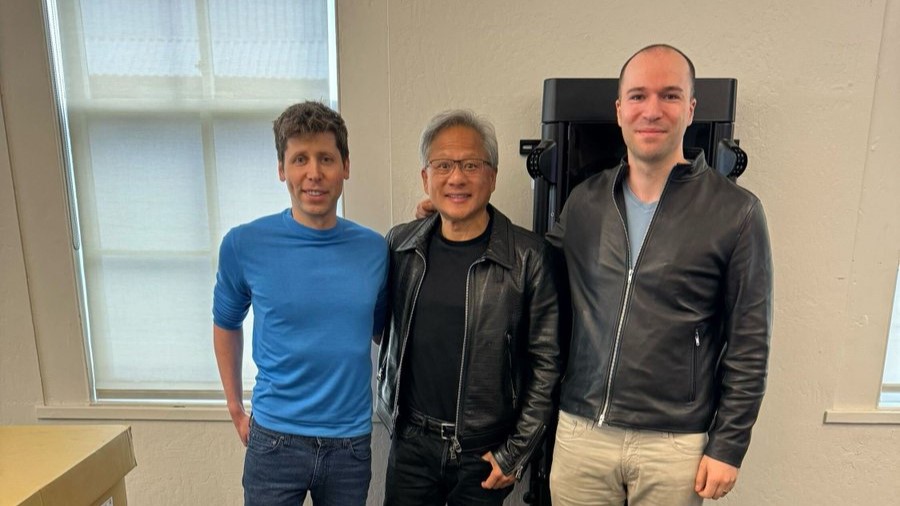

"This investment and infrastructure partnership mark the next leap forward—deploying 10 gigawatts to power the next era of intelligence," said Jensen Huang, NVIDIA's founder and CEO, in the announcement. His counterpart, OpenAI CEO Sam Altman, emphasized that "compute infrastructure will be the basis for the economy of the future."

The partnership structure reveals sophisticated financial engineering. According to sources familiar with the arrangement, NVIDIA's equity investment will be staged alongside infrastructure deployment, with approximately $10 billion committed at the first gigawatt milestone. This approach effectively transforms NVIDIA from a traditional hardware vendor into a strategic capital partner, sharing both the risks and rewards of OpenAI's ambitious scaling plans.
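To illustrate how a staged commitment of this kind could accumulate, the sketch below lays out a hypothetical tranche schedule in Python. The article only gives two data points: roughly $10 billion at the first gigawatt and up to $100 billion across 10 gigawatts. The even split of $10 billion per gigawatt in between is an assumption made here for illustration, not a disclosed term, and `tranche_schedule` is a made-up helper name.

```python
def tranche_schedule(total_bn: float = 100.0, gigawatts: int = 10):
    """Return (milestone_gw, tranche_bn, cumulative_bn) for each gigawatt milestone.

    Assumes equal tranches per gigawatt, which the source does not confirm.
    """
    per_gw = total_bn / gigawatts
    return [(gw, per_gw, per_gw * gw) for gw in range(1, gigawatts + 1)]


schedule = tranche_schedule()
for milestone_gw, tranche_bn, cumulative_bn in schedule:
    print(f"{milestone_gw:>2} GW online: +${tranche_bn:.0f}B (cumulative ${cumulative_bn:.0f}B)")
```

Under the even-split assumption, NVIDIA's capital at risk at any point scales with the capacity actually deployed, which is the risk-sharing property the staged structure is meant to provide.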

Breaking the Cloud Dependency Cycle

The agreement represents a strategic pivot for OpenAI, which has historically relied heavily on Microsoft's Azure cloud infrastructure following the software giant's roughly $13 billion in cumulative investment. By securing direct access to cutting-edge hardware through its partnership with NVIDIA, OpenAI gains crucial negotiating leverage and supply chain diversification.

Vendor lock-in in cloud computing refers to a customer's dependency on a single vendor, making it difficult and costly to switch to another. This creates risks like limited flexibility and potential for increased expenses. Multi-cloud strategies are often adopted to mitigate such single provider dependencies.

Market observers note this arrangement complements rather than replaces OpenAI's existing relationships. The company continues to collaborate with Oracle through the Stargate initiative and maintains its Microsoft partnership, creating a multi-vendor approach that reduces single-source dependency risks.

"OpenAI is essentially creating vendor competition for its most critical resource," noted one industry analyst who requested anonymity due to client relationships. "This could fundamentally alter pricing dynamics in AI infrastructure."

The Great AI Infrastructure Arms Race

The NVIDIA-OpenAI partnership arrives amid a broader industry transformation toward massive AI computing deployments. Meta has publicly discussed multi-gigawatt clusters for its AI ambitions, while Elon Musk's xAI has rapidly constructed the "Colossus" supercomputer facility. Microsoft recently announced plans for its Fairwater data center in Wisconsin, involving more than $7 billion of investment in the state.

This infrastructure scaling reflects a fundamental shift in AI development philosophy. Unlike previous computing paradigms focused on algorithmic efficiency, current AI progress increasingly depends on raw computational scale. Training more capable models requires exponentially larger GPU clusters, creating a capital-intensive barrier that favors well-funded players.

Projected capital expenditures on AI infrastructure by major tech companies, highlighting the escalating 'arms race'.

| Company | Projected AI Infrastructure Spending | Timeframe/Notes |
|---|---|---|
| Microsoft | $80 billion | Fiscal Year 2025 |
| Microsoft | $120 billion | Fiscal Year 2026 |
| Microsoft | $100 billion | Next fiscal year (as of August 2025) |
| Meta | $60 - $65 billion | 2025 capital expenditures |
| Meta | $66 - $72 billion | Next fiscal year (as of August 2025) |
| Meta | $100 billion | Hinted for Fiscal Year 2026 business investment |
| xAI | $13 billion | 2025 spending plan, includes Colossus supercomputer |
| xAI | Tens of billions | Estimated cost for Colossus 2 supercomputer |
| Amazon | $100 billion | Projected capital expenditures for 2025 |
| Amazon | $118 billion | Expected amount for 2025 expenditure (as of August 2025) |
| Alphabet (Google) | $75 billion | Projected capital expenditures for 2025 |
| Alphabet (Google) | $85 billion | Expected for next fiscal year (as of August 2025) |

Energy constraints have emerged as the primary bottleneck rather than chip availability. Securing reliable, affordable power—particularly from renewable sources—has become as critical as semiconductor supply chains. The companies must navigate complex permitting processes, transmission infrastructure limitations, and environmental regulations across multiple jurisdictions.

Investment Implications Across the Technology Stack

Financial markets are likely to view this partnership as validation of several investment themes. NVIDIA's stock has already benefited from AI infrastructure demand, but this agreement provides unprecedented revenue visibility extending through 2029. The staged investment structure also positions NVIDIA to capture upside from OpenAI's potential success while mitigating downside risks.

The partnership creates ripple effects throughout the technology supply chain. High-bandwidth memory suppliers including SK hynix, Micron, and Samsung stand to benefit from sustained demand, though pricing pressures may emerge by 2026 as production capacity expands. Advanced packaging specialists and foundry services, particularly Taiwan Semiconductor Manufacturing Company, face continued capacity constraints in supporting accelerated chip production.

Power infrastructure and renewable energy companies may experience increased investor attention as AI companies seek dedicated power arrangements. Some analysts suggest this could accelerate adoption of small modular nuclear reactors and grid-scale energy storage solutions specifically designed for high-density computing loads.

Navigating Regulatory and Technical Headwinds

The partnership faces several significant risk factors that could affect execution timelines and financial returns. Regulatory scrutiny appears increasingly likely as antitrust authorities examine the competitive implications of a hardware supplier with dominant market share taking equity positions in its major customers.

Environmental concerns about energy consumption for AI training could prompt policy interventions limiting power allocations or imposing carbon pricing mechanisms. Water usage for cooling systems and land use for data center construction may face local opposition in preferred geographical markets.

Technical risks include potential breakthroughs in alternative computing architectures that could reduce NVIDIA's competitive advantages. Custom silicon developments by cloud providers or advances in quantum computing could disrupt current hardware dependencies, though such transitions typically require multiple years to materialize.

Small Modular Reactors (SMRs) are advanced, compact nuclear reactors designed for efficient, modular power generation. These units are increasingly being explored as a reliable, carbon-free energy source for high-demand applications, including powering the vast data centers critical for artificial intelligence infrastructure.

The Trillion-Dollar Question: What Comes Next

This partnership signals that AI development has entered a new phase where success depends as much on infrastructure capabilities as algorithmic innovation. The companies willing and able to commit hundreds of billions to computing infrastructure may determine which AI systems ultimately achieve widespread deployment.

Market watchers should monitor several key indicators in coming months: definitive agreement terms, power purchase agreements for specific data center locations, and evidence of similar partnerships among competing AI companies. The pace of Vera Rubin platform development and first commercial deployments will provide early signals about execution capabilities.

For investors, the NVIDIA-OpenAI partnership represents both opportunity and concentration risk. While it extends NVIDIA's AI infrastructure leadership through the decade, it also increases dependence on a single customer relationship that could face regulatory challenges or technical disruptions.

The broader implication extends beyond financial markets to questions about AI development concentration among a small number of well-capitalized entities. As infrastructure requirements grow exponentially, the number of organizations capable of training frontier AI systems may shrink, potentially affecting innovation diversity and competitive dynamics in artificial intelligence.

House Investment Thesis

| Category | Summary Details |
|---|---|
| Executive Take | A step-function verticalization where NVIDIA becomes a capital partner to lock in key AI workloads. OpenAI gains a multi-year compute runway and vendor leverage. Scale is energy-first (10 GW ≈ 87.6 TWh/year, powering ~6-10M accelerators), making power procurement the gating item. ~70% odds on a definitive deal, with timeline slippage more likely than cancellation. |
| What's New | $100B from NVIDIA, progress-based (e.g., $10B for 1 GW at a ~$500B OpenAI valuation). Total infrastructure cost up to $400B. 10 GW target, first wave on "Vera Rubin" platform starting H2 2026. The deal is not exclusive, complementing OpenAI's other plans (e.g., Stargate with Oracle) and rebalancing its reliance on Microsoft. |
| Trend vs. Exception | Trend: Multi-gigawatt AI factories are the new competitive unit (examples: OpenAI-Oracle Stargate, Microsoft Fairwater, Meta, xAI). Exception: The scale of a supplier (NVIDIA) investing equity in a customer (OpenAI) to verticalize economics and raise rivals' cost of capital. |
| Scale Math (Estimates) | Energy: 10 GW = 87.6 TWh/year. Opex: $2.6B-$8.8B/year for energy alone. Accelerators: ~5.6M-10.4M GPUs. Implied Hardware TAM: $300B-$600B cumulative industry revenue potential. |
| Economics & P&L - NVIDIA | Revenue: $300B-$500B cumulative sales from 6-9M accelerators over 3-5 years. Margin: Highly accretive, with recent GM ~72-76%; mix shift to systems may temper peak GM. Risks: Customer concentration, antitrust, supply chain, and power siting delays. |
| Economics & P&L - OpenAI | Strategic Win: Diversified compute sources, roadmap co-optimization, and vendor competition. Capital Structure: Nonprofit/Microsoft realignment plus NVIDIA equity adds a non-controlling pillar. Execution risk remains high. |
| Second-Order Beneficiaries | HBM suppliers (SK hynix, Micron, Samsung); Advanced packaging (TSMC, ASE, Amkor); Power & grid utilities; Systems OEMs & networking (e.g., Supermicro). |
| Root Causes | 1. Compute is the rate limiter for model quality. 2. Energy is the bottleneck. 3. Need for HW-SW co-design. 4. Geopolitics/onshoring incentives. |
| Risks | Regulatory: Antitrust scrutiny. Execution: Energy siting/permitting delays. Tech: Successful custom silicon or algorithmic efficiency reduces FLOP demand. Financing: Political heat from "circularity" optics. |
| Scenarios (Probabilities) | Base (55%): Definitive agreement in 3-6 months; staged rollouts with partial slippage. Bull (25%): Power siting breakthroughs, looser HBM supply, better platform performance. Bear (20%): Antitrust/power delays >18 months, OpenAI cap structure disputes. |
| What to Track Next | Signed Power Purchase Agreements (PPAs) and interconnect details; HBM capacity and pricing; Definitive deal terms (equity, governance, commitments); Delivery proof points (Rubin ship dates, cluster scale); OpenAI's cloud mix for training. |
| Positioning Thoughts | Core: NVIDIA as the index of AI compute with demand visibility through 2028-29. Picks & Shovels: Overweight HBM, packaging, power infrastructure. Hedges: Monitor custom silicon and regulatory overhangs. |
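The energy opex range in the Scale Math row can be sanity-checked by backing out the electricity price it implies. The Python sketch below divides the quoted annual cost range by the 87.6 TWh figure. It assumes the full 87.6 TWh is actually consumed and that opex here means purchased electricity only. The helper name is hypothetical.

```python
ENERGY_TWH = 87.6  # annual consumption quoted in the table


def implied_price_usd_per_mwh(opex_usd_bn: float, energy_twh: float = ENERGY_TWH) -> float:
    """Electricity price in $/MWh implied by an annual energy bill in $ billions."""
    opex_usd = opex_usd_bn * 1e9
    energy_mwh = energy_twh * 1e6  # 1 TWh = 1,000,000 MWh
    return opex_usd / energy_mwh


low_price = implied_price_usd_per_mwh(2.6)
high_price = implied_price_usd_per_mwh(8.8)
print(f"Implied price range: ${low_price:.1f} to ${high_price:.1f} per MWh")
```

The quoted range works out to roughly $30 to $100 per megawatt-hour (about 3 to 10 cents per kilowatt-hour), so the width of the opex estimate reflects how much the final bill depends on the power contracts OpenAI can secure.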

Past performance does not guarantee future results. Investors should consult financial advisors for personalized guidance based on individual circumstances and risk tolerance.